Talks on Technology Science (ToTS) and Topics in Privacy (TIP)

Spring 2017

This is the schedule of weekly talks on Technology Science given by expert researchers, public interest groups, and others on the social impact of technology and its unforeseen consequences.

Join us most Friday mornings from 10:30 AM to 12 PM at CGIS Knafel, Room K354 (1737 Cambridge St, Cambridge, MA).

| Date | Speakers | Topic |

| 1/27 | Michael Sulmeyer and Ben Buchanan, Harvard University | Hacking the Election: The Cybersecurity Threats to Votes and Voters |

| 2/3 | None | None |

| 2/10 | Arvind Narayanan, Princeton University | How Are You Tracked Online? |

| 2/17 (K262) | Alvaro Bedoya, Georgetown University | The Perpetual Line-Up: Unregulated Use of Facial Recognition by the Police in America |

| 2/24 | Samer Hassan, Berkman Klein Center | Law Is Code: Blockchain as a regulatory technology |

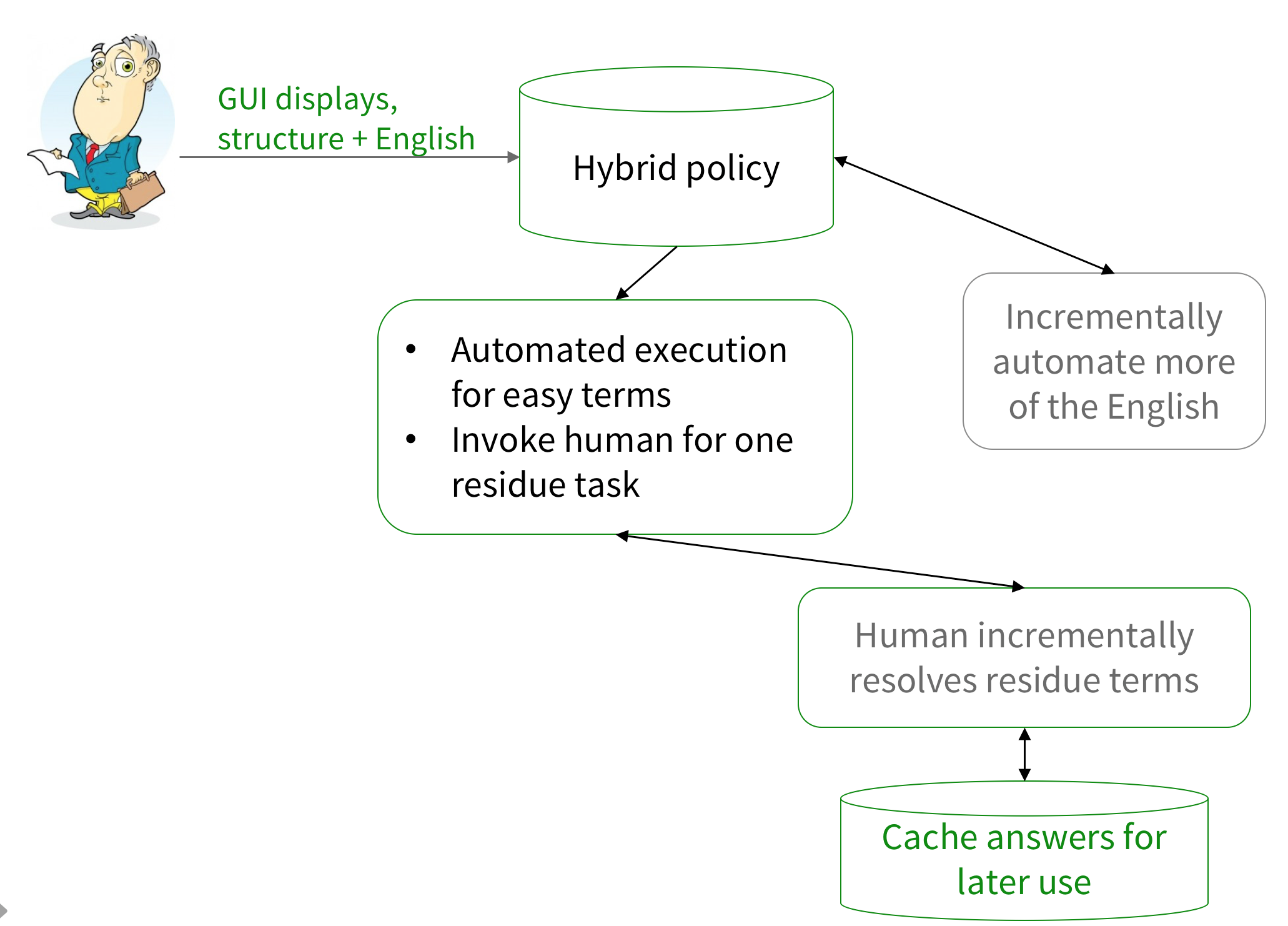

| 3/3 | Arnon Rosenthal, MITRE | Human-in-the-loop rulesets for legalese, alerts, and access control |

| 3/10 | No talk | No talk - Spring Recess |

| 3/17 | No talk | No talk - Spring Recess |

| 3/24 | Julia Angwin, ProPublica | Algorithmic Accountability |

| 3/31 | None | None |

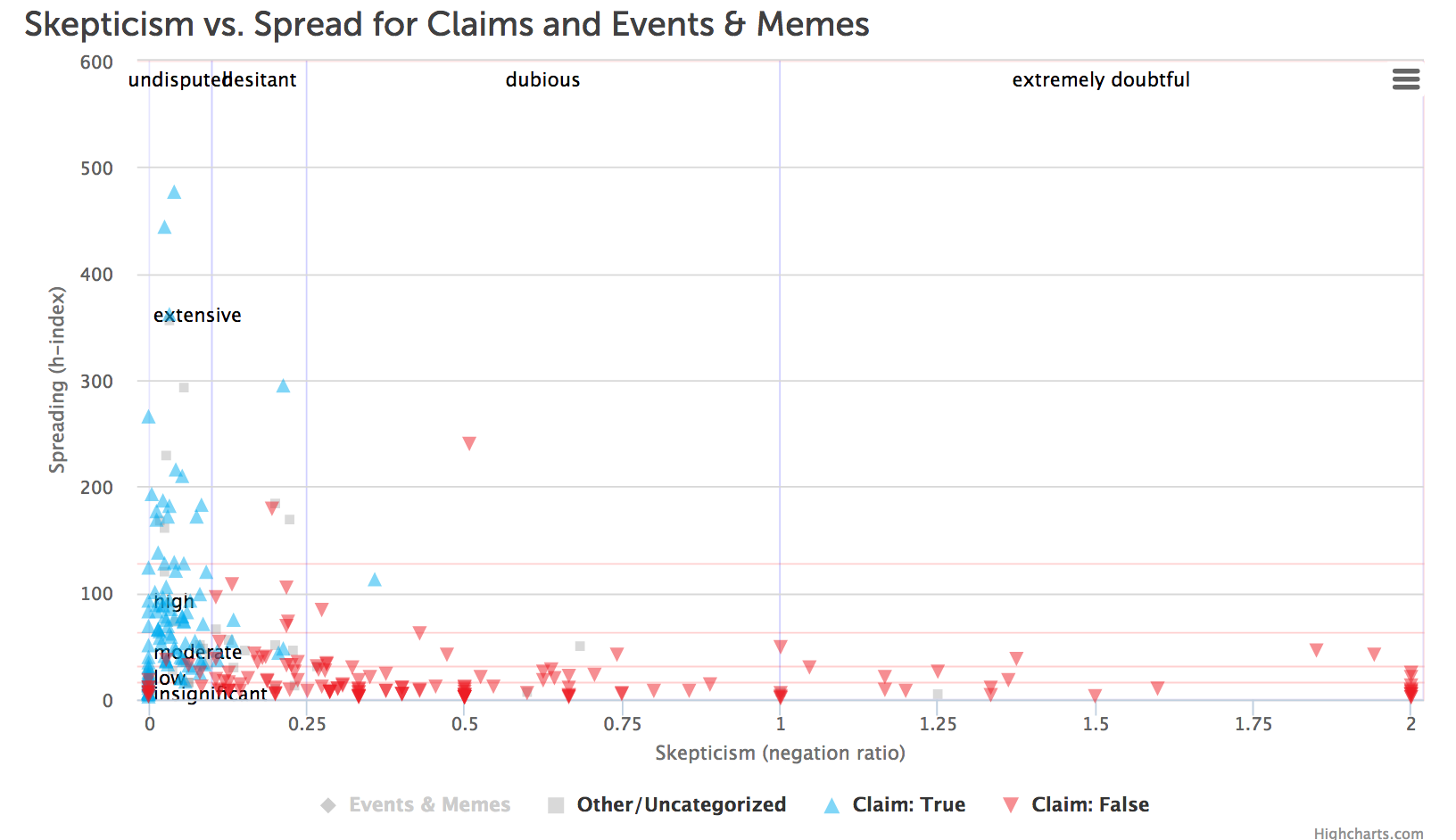

| 4/7 | J. Nathan Matias, MIT | Reducing the Spread of Fake News: Coordinating Humans to Nudge AI Behavior |

| 4/14 | P. Takis Metaxas, Wellesley College | Propaganda, Misinformation, "Fake News", and what to do about it |

| 4/21 | Ben Green, Harvard University | Open Data Privacy Playbook |

| 4/28 | Carrie Goldberg, attorney | The Weaponization of Tech |

| 5/5 | None | None |

| 5/12 | Paula Kift, Palantir | Ethical Engineering |

Descriptions

| 1/27 | Hacking the Election: The Cybersecurity Threats to Votes and Voters. The debate on election hacking is raging. In this talk, we discuss both Russian cyber operations and weaknesses in the American electoral system. We will provide a framework for understanding recent Russian activity, adding context to today’s headlines. We will also discuss technical weaknesses in voting infrastructure that urgently need to be remediated and outline potential mechanisms for doing so. As part of this discussion, we’ll address the debate around the “critical infrastructure” designation and its benefits and limits. Speakers: Ben Buchanan is a Postdoctoral Fellow at Harvard University’s Cybersecurity Project, where he conducts research on the intersection of cybersecurity and statecraft. His first book, The Cybersecurity Dilemma, will be published by Oxford University Press in February 2017. He has also written on attributing cyber attacks, deterrence in cyber operations, cryptography, election cybersecurity, and the spread of malicious code between nations and non-state actors. He received his PhD in War Studies from King’s College London, where he was a Marshall Scholar, and earned master's and undergraduate degrees from Georgetown University. Michael Sulmeyer is the Belfer Center's Cyber Security Project Director at the Harvard Kennedy School. He recently concluded several years in the Office of the Secretary of Defense, where his last role was Director for Plans and Operations for Cyber Policy. He was also Senior Policy Advisor to the Deputy Assistant Secretary of Defense for Cyber Policy. In these jobs, he worked closely with the Joint Staff and Cyber Command on a variety of efforts to counter malicious cyber activity against U.S. and DoD interests. |

| 2/3 | No talk |

| 2/10 | How Are You Tracked Online? When you browse the web, hidden “third parties” collect a large amount of data about your behavior. This data feeds algorithms that target ads to you, tailor your news recommendations, and sometimes vary the prices of online products. The network of trackers comprises hundreds of entities, but consumers have little awareness of its pervasiveness and sophistication. In this talk I'll discuss the findings and experiences of the Princeton Web Transparency and Accountability Project, which continually monitors the web to uncover what user data companies collect, how they collect it, and what they do with it. We do this via a largely automated monthly “census” of the top 1 million websites, in effect “tracking the trackers”. Our tools and findings have proven useful to regulators and investigative journalists, and have led to greater public awareness, the cessation of some privacy-infringing practices, and the creation of new consumer privacy tools. But the work raises many new questions. For example, should we hold websites accountable for the privacy breaches caused by third parties? I'll conclude with a discussion of such tricky issues and make recommendations for public policy and the regulation of privacy. See the paper at https://randomwalker.info/publications/webtap-chapter.pdf Speaker: Arvind Narayanan is an Assistant Professor of Computer Science at Princeton. He leads the Princeton Web Transparency and Accountability Project to uncover how companies collect and use our personal information. Narayanan also leads a research team investigating the security, anonymity, and stability of cryptocurrencies as well as novel applications of blockchains. He co-created a Massive Open Online Course as well as a textbook on Bitcoin and cryptocurrency technologies. His doctoral research showed the fundamental limits of de-identification, for which he received the Privacy Enhancing Technologies Award. Narayanan is an affiliated faculty member at the Center for Information Technology Policy at Princeton and an affiliate scholar at Stanford Law School's Center for Internet and Society. |

| 2/17 (Room K262) | The Perpetual Line-Up: Unregulated Use of Facial Recognition by the Police in America. People often think of face recognition technology as something out of science fiction. In reality, half of all American adults are in a police or FBI face recognition network. Police face recognition technology is much more pervasive than people realize, yet it is not subject to any meaningful system of regulation. In this talk, Alvaro Bedoya will discuss The Perpetual Line-Up, the Center on Privacy & Technology's recent year-long investigation into police use of face recognition. In a world where we have no (formal) reasonable expectation of privacy in public, what protections does the Fourth Amendment provide for your face and against the police technologies that track it? Does the First Amendment protect us against the scanning of faces at a protest, or does it, too, offer little protection? How should judges and policymakers address face recognition systems' apparent demographic bias in enrollment and accuracy? Speaker: Alvaro Bedoya is the founding Executive Director of Georgetown Law’s Center on Privacy & Technology. He is an expert on digital privacy issues, including face recognition, commercial data collection, and government surveillance, with a focus on their impact on communities of color. Prior to joining the Center, he served as Chief Counsel to Senator Al Franken and to the U.S. Senate Judiciary Subcommittee on Privacy, Technology and the Law. In that capacity, Alvaro advised Senator Franken on oversight investigations into major Internet and technology companies and on crafting geolocation privacy, cybersecurity, and NSA reform legislation. Alvaro is a graduate of Harvard College and Yale Law School. |

| 2/24 | Law Is Code: Blockchain as a regulatory technology. “Code is law” refers to the idea that, with the advent of digital technology, code has progressively established itself as the predominant way to regulate the behavior of Internet users. Yet, while computer code can enforce rules more efficiently than legal code, it also comes with a series of limitations, mostly because it is difficult to transpose the ambiguity and flexibility of legal rules into a formalized language that can be interpreted by a machine. The blockchain, the decentralized technology behind Bitcoin, is getting plenty of attention as a new paradigm with multiple implications. With blockchain-based “smart contracts”, code is assuming an even stronger role in regulating people’s interactions over the Internet, as many contractual transactions get transposed into smart contract code. In this talk, I will discuss the shift from the traditional notion of “code is law” (i.e., code having the effect of law) to the new conception of “law is code” (i.e., law being defined as code). More at https://firstmonday.org/ojs/index.php/fm/article/view/7113 Speaker: Samer Hassan (PhD) is an activist and researcher, a Fellow at the Berkman Center for Internet & Society (Harvard University), and an Associate Professor at the Universidad Complutense de Madrid (Spain). Currently focused on decentralized collaboration, he has carried out research in decentralized systems, social simulation, and artificial intelligence in positions at the University of Surrey (UK) and the American University of Science & Technology (Lebanon). Coming from a multidisciplinary background in Computer Science and Social Sciences, he has more than 45 publications in those fields. Engaged in free/open source projects, he co-founded the Comunes Nonprofit and the Move Commons webtool project. He is an accredited grassroots facilitator and has experience in multiple communities and grassroots initiatives. He is the UCM Principal Investigator in the EU-funded P2Pvalue project on building decentralized web tools for collaborative communities and social movements. His research interests include Commons-based peer production, decentralized architectures, blockchain-based decentralized autonomous organizations, online communities, grassroots social movements, and cyberethics. Follow Samer on Twitter: @samerP2P |

| 3/3 | Human-in-the-loop rulesets for legalese, alerts, and access control. Rulesets provide a powerful formalism for knowledge capture, but many problems resist full formalization, so we should aim for partial success. Rules are a means of knowledge capture, a next step after schemas and ontologies. But to reach their potential, we must devise ways to specify and operate “imperfect” systems, assisted by humans. This talk describes three ways that rulesets can partially automate customers’ routine tasks to support incremental and agile systems: 1) using a mix of rules, encapsulated English prose, and stored answers to express complex policies; 2) applying principled ways to automate part of the run-time processing, so that rulesets can be flexible about what information they receive without re-programming; and 3) explaining to users which rules apply in their situation. Compliance is a natural initial application; eventually the approach might also be used for regulations and laws. Speaker: Arnon Rosenthal has consulted and published in data sharing and administration, databases, clouds, data security, policy-based systems, and graph algorithms. He has (according to ResearchGate) 150+ publications and 4000+ citations. His work tries to address many sides of a problem simultaneously: clarify and decompose the challenges, understand the pragmatics, and simplify and generalize components of a solution, for a realistically imperfect world. He has worked at The MITRE Corporation, Computer Corporation of America, and Sperry Research, was a visiting researcher at IBM Almaden Research and ETH Zurich, and was a faculty member at the University of Michigan (Ann Arbor). He holds a Ph.D. from the University of California, Berkeley. |

| 3/10 | No talk - Spring Recess |

| 3/17 | No talk - Spring Recess |

| 3/24 | Algorithmic Accountability. Machines are making a lot of decisions that used to be made by humans. Machines now help us make individual decisions, such as which news we read and which ads we see. They also make societal decisions, such as which neighborhoods get a heavier police presence and which receive more attention from political candidates. Journalist Julia Angwin talks about the challenges of holding machines accountable for their decisions. Read more at https://www.propublica.org/series/machine-bias Speaker: Julia Angwin is a senior reporter at ProPublica. From 2000 to 2013, she was a reporter at The Wall Street Journal, where she led a privacy investigative team that was a finalist for a Pulitzer Prize in Explanatory Reporting in 2011 and won a Gerald Loeb Award in 2010. Her book "Dragnet Nation: A Quest for Privacy, Security and Freedom in a World of Relentless Surveillance" was published by Times Books in 2014 and was shortlisted for Best Business Book of the Year by the Financial Times. Also in 2014, Julia was named reporter of the year by the Newswomen’s Club of New York. In 2003, she was on a team of reporters at The Wall Street Journal that was awarded the Pulitzer Prize in Explanatory Reporting for coverage of corporate corruption. She is also the author of “Stealing MySpace: The Battle to Control the Most Popular Website in America” (Random House, March 2009). She earned a B.A. in mathematics from the University of Chicago and an MBA from the Graduate School of Business at Columbia University. |

| 3/31 | No talk |

| 4/7 | Reducing the Spread of Fake News: Coordinating Humans to Nudge AI Behavior. How can we pro-socially influence machine behavior without access to code or training data? In a recent study, a community with more than 15 million subscribers tested the effect of encouraging fact checking on the algorithmic spread of unreliable news, discovering that adjustments in the wording of this "AI nudge" could reduce the scores that shape the algorithmic spread of unreliable news by a factor of two. We found that we can persuade algorithms to behave differently by nudging people to behave differently. How should we think about the politics and ethics of systematically influencing black-box systems from the outside? This AI nudge was conducted using CivilServant, novel software that helps communities conduct their own policy experiments on human and machine behavior, independently of online platforms. In this talk, hear the results of our experiment on reducing the spread of unreliable news, alongside reflections on the history and future of democratic policy experimentation. Speaker: J. Nathan Matias is a Ph.D. candidate at the MIT Media Lab Center for Civic Media, an affiliate at the Berkman Klein Center at Harvard, and the founder of CivilServant. He conducts independent, public interest research on flourishing, fair, and safe participation online. His recent work includes research on online harassment prevention, harassment reporting, volunteer moderation online, behavior change toward equality, and online social movements. Nathan has extensive experience in tech startups, nonprofits, and corporate research, including SwiftKey, Microsoft Research, and the Ministry of Stories. His creative work and research have been covered extensively by the international press, and he has published data journalism and intellectual history in The Atlantic, The Guardian, PBS, and Boston Magazine. |

| 4/14 | Propaganda, Misinformation, "Fake News", and what to do about it. Recently, "fake news" has become a term used daily by laymen and the President alike to indicate lies and propaganda presented as news, but it is not a new phenomenon. Rather, its novelty lies in its omnipresence due to social media technologies. But how prevalent is fake news, and how severe a challenge does it present? In this talk we will first review the history of efforts to influence public opinion and elections through web spam and online social media. We will study examples of successful Google bombs and Twitter bombs that have been detonated in cyberspace in the last decade. We will also describe our online rumor-monitoring system, twittertrails.com, which tracks the diffusion of rumors on Twitter. We will end by discussing why this problem, despite being enabled by and gaining prominence through online technologies, cannot be solved by technical approaches alone. Speaker: P. Takis Metaxas is a professor of computer science at Wellesley College, an affiliate at Harvard's Center for Research on Computation and Society (CRCS), and the Faculty Director of the Albright Institute for Global Affairs. Takis studies how the web is changing the way people think, decide, and act as individuals and as members of social communities. Much of Takis’ research is in web science, an emerging interdisciplinary field that connects computer science to the social sciences and natural sciences. Media coverage of his work has appeared in The Washington Post, Science (podcast), The Atlantic, The Huffington Post, MIT Technology Review, and many other mass media outlets. |

| 4/21 | Open Data Privacy Playbook. Cities today collect and store a wide range of data that may contain sensitive information about residents. As cities embrace open data initiatives, more of this information is released to the public. While opening data has many important benefits, sharing data comes with inherent risks to individual privacy: released data can reveal information about individuals that would otherwise not be public knowledge. This talk discusses privacy-protective approaches and processes that could be adopted by cities and other groups that publicly release data. See the Open Data Privacy Playbook. Speaker: Ben Green is a PhD Candidate studying Applied Mathematics at Harvard's School of Engineering and Applied Sciences and a Fellow at the Berkman Klein Center for Internet & Society. His primary areas of study are the uses of data and technology by city governments; the intersection of data, algorithms, and social justice; and the impacts of algorithms and technology on society. Ben is currently on leave for the 2016-2017 academic year on a fellowship to work for the City of Boston Analytics Team. |

| 4/28 | The Weaponization of Tech. Every day, millions of us log into our social media profiles without fully understanding that these sites aid in the manipulative abuse and destruction of people’s lives. It’s time to face the facts: technology is a very effective weapon for users to dox, leak, blackmail, humiliate, extort, abuse, and instigate violence against others. So why aren’t tech companies proactively dealing with it and protecting their loyal users? Speaker: Carrie Goldberg, Esq. is a victims’ rights lawyer in Brooklyn. She litigates for victims of online harassment, sexual assault, and blackmail. She is best known for work involving revenge porn: representing victims in court, fighting for criminal and civil laws at the local, state, and federal levels, and agitating major tech companies. |

| 5/5 | No talk |

| 5/12 | Ethical Engineering. Discussions surrounding privacy and data protection often focus on the interaction between society and the law. As the use of new technologies becomes more widespread, the law responds by bringing the associated information collection and use back in line with people's reasonable expectation of privacy. Such is the history of the Fourth Amendment, for instance, with regard to the content of telephone calls. But the history of the Fourth Amendment also demonstrates that this process is far from seamless, because the law tends to lag woefully behind technological developments, resulting in a lack of legal clarity at best and a significant, prolonged gap in protections at worst. This talk will focus on a commonly neglected aspect of this sociotechnical space: the role and responsibility of engineers and technologists. More specifically, drawing on my work as Privacy and Civil Liberties Engineer at Palantir Technologies, I will discuss how technologists can help bridge the gap between social and legal developments, namely by providing the tools for sensible self-regulation so that both commercial and government entities are empowered to address consumers' and citizens' privacy interests and civil liberties concerns in advance of constitutional and statutory modernization. Speaker: Paula Kift is a Privacy and Civil Liberties Engineer at Palantir Technologies. She joined the team upon graduating with a master's degree in Media, Culture, and Communication from New York University (NYU) in 2016, where she was also a Fellow at the Information Law Institute. Paula holds a BA summa cum laude in French, European Cultural Studies, and Near Eastern Studies from Princeton University and a master's degree in public policy from the Hertie School of Governance in Berlin. Previously, Paula worked as a research assistant at the Alexander von Humboldt Institute for Internet and Society (HIIG) and at the Global Public Policy Institute (GPPi) in Berlin. She is a member of the GPPi Circle of Friends and an Associate Member of the American Council on Germany (ACG). |

Prior Sessions

Fall 2016 | Spring 2016 | Fall 2015 | Spring 2014 | Fall 2013 | Spring 2013 | Fall 2012 | Spring 2012 | Fall 2011